Remember that late-night talk show bit where an image of a political figure is shown with someone else’s mouth superimposed on top, in order to make them say dubious things? It always looked a little ropey, but that was part of the effect. Well, this new AI tool also takes still images of human subjects and animates the mouth and head movements, but this time the effect is surprisingly, almost worryingly convincing.

The tool is called EMO: Emote Portrait Alive, and it’s been developed by several researchers from the Institute for Intelligent Computing, part of the Alibaba Group. The tool takes a single reference image, extracts generated motion frames, and then combines them with vocal audio through a complex diffusion process in which the facial region is integrated with multi-frame noise samples and then de-noised while adding generated imagery to synch with the audio, eventually generating a video of the subject not only lip-synching, but also emoting various facial expressions and head poses.

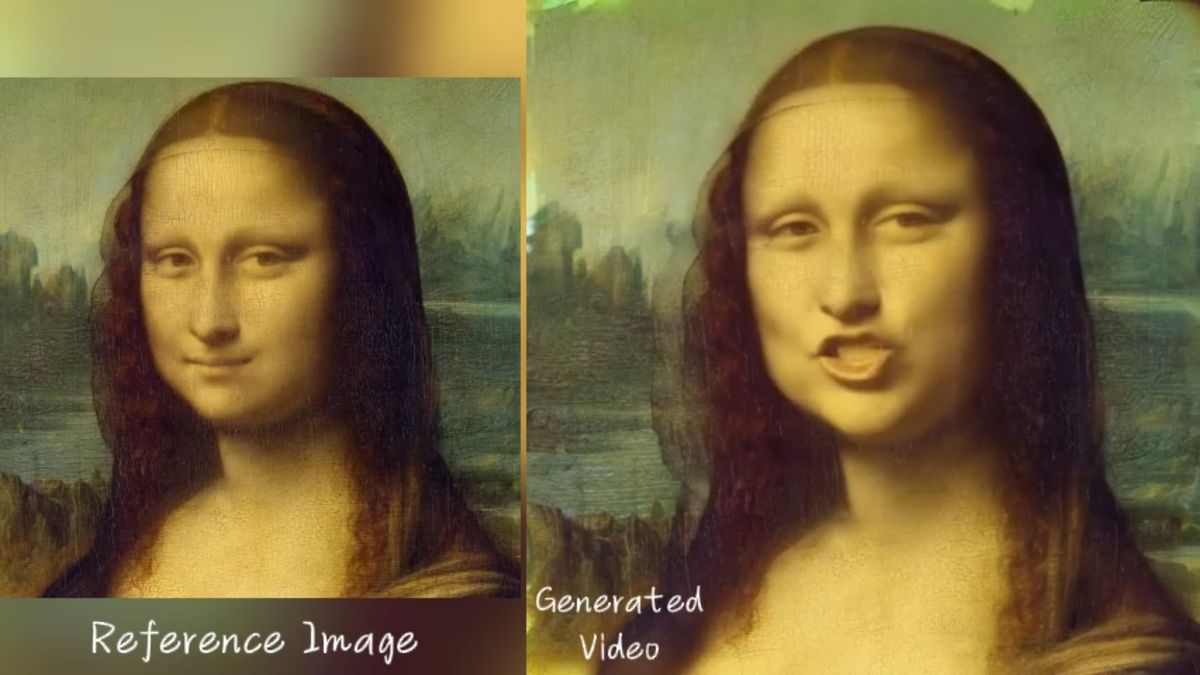

The technology is demonstrated using sample images of various figures ranging from real-life celebrities, to AI generated people, to the Mona Lisa, while the vocal audio used includes a Dua Lipa track, pre-recorded interview clips, and Shakespearian monologues. After the process has been applied the generated avatar appears to have come to life, mouthing and moving to the chosen audio.

The effect is surprisingly accurate, although it has to be said, far from perfect. “Buh” sounds sometimes appear to come from open mouths rather than closed lips, and the occasional syllable appears from clenched teeth, as if the avatar is resisting the AI’s insistence on bringing them to life to sing and perform for the internet.

See more

Still, it’s a remarkable effect, and one that’s likely to pass without notice from a casual observer unless they were told specifically to watch out for mouth movements and timing.

Thinking of upgrading?

Windows 11 review: What we think of the latest OS.

How to install Windows 11: Our guide to a secure install.

Windows 11 TPM requirement: Strict OS security.

Even more impressive is a later demonstration of what the company refers to as “cross-actor performance”. A clip shows Joaquin Phoenix in full make-up as the Joker, except this time with the audio of Heath Ledger’s interpretation of the character from The Dark Knight, including a reasonable approximation of Ledger’s trademark swallowing and lip smacking in the role.

While the technology is undoubtedly impressive, it’s likely to do little to dissuade the creeping notion that AI deepfake content, and all the nefarious purposes it can be potentially used for, is progressing at a remarkable rate.

While these videos make for excellent tech demonstrations, they are reminders that the difference between what we presume is real and what is computer generated is rapidly becoming harder to spot as image and video generation technology matures. AI tools can sometimes demonstrate a terrifying ability to churn out generated content at an incredible rate and with increasing complexity, and that has some troubling implications. Although perhaps that’s just me being a big old worrywart.

Will it not be long, I wonder, before our holiday snaps can be grabbed from our long defunct Facebook pages, to be turned by AI tools into videos of us mouthing songs we never sang? At least, that’s my excuse.

No, I did not drunkenly attempt karaoke in Cyprus. It’s an AI-enhanced fake, that one, I promise.